That’s Irrelevant!

In her book, Critical Thinking: An Appeal To Reason, Peg Tittle has an interesting and useful way of organizing 15 logical fallacies. Simply put, they’re all irrelevant to the assessment of whether an argument is true or not. Using Tittle’s guidelines, we can quickly sort out what we need to pay attention to and what we can safely ignore.

Though these fallacies are irrelevant to truth, they are very relevant to persuasion. Critical thinking is about discovering the truth; it’s about the present and the past. Persuasion is about the future, where truth has yet to be established. Critical thinking helps us decide what we can be certain of. Persuasion helps us make good choices when we’re uncertain. Critical thinking is about truth; persuasion is about choice. What’s poison to one is often catnip to the other.

With that thought in mind, let’s take a look at Tittle’s 15 irrelevant fallacies. If someone tosses one of these at you in a debate, your response is simple: “That’s irrelevant.”

- Ad hominem: character – the person who created the argument is evil; therefore, the argument is unacceptable. (Or the reverse: the person is good; therefore, the argument is acceptable.)

- Ad hominem: tu quoque – the person making the argument doesn’t practice what she preaches. Example: “You say it’s wrong to hunt, but you eat meat.”

- Ad hominem: poisoning the well – the person making the argument has something to gain; he’s not disinterested. Example: “Before you listen to my opponent, I would like to remind you that he stands to profit handsomely if you approve this proposal.”

- Genetic fallacy – considering the origin of an idea rather than the argument for it. Example: “The idea came to you in a dream, so it’s not acceptable.”

- Inappropriate standard: authority – accepting a claim because an authority endorses it. An authority may claim to be an expert, but experts are biased in predictable ways.

- Inappropriate standard: tradition – we accept something because we have traditionally accepted it. But traditions are often fabricated.

- Inappropriate standard: common practice – just because everybody’s doing it doesn’t make it true. Such arguments appeal to inertia and the status quo. Example: most people peel bananas from the “wrong” end.

- Inappropriate standard: moderation/extreme – claiming that a position is true (or false) simply because it is moderate (or extreme). The moderation version is also known as the Fallacy of the Golden Mean.

- Appeal to popularity: bandwagon effect – just because an idea is popular doesn’t make it true. This appeal is often found in ads.

- Appeal to popularity: elites – an idea (or product) must be good because only a select few can make the grade or be admitted. “Many are called; few are chosen.”

- Two wrongs – justifying one wrong by pointing to another. Example: “Our competitors have cheated; therefore, it’s acceptable for us to cheat.”

- Going off topic: straw man or paper tiger – misrepresenting the other side, that is, responding to an argument other than the one actually presented.

- Going off topic: red herring – a false scent that leads the argument astray. Person 1: We shouldn’t do that. Person 2: If we don’t do it, someone else will.

- Going off topic: non sequitur – making a statement that doesn’t follow logically from its antecedents. Person 1: We should feed the poor. Person 2: You’re a communist.

- Appeal to emotion – using emotions rather than logic to assess truth. Example: “You say we should kill millions of chickens to stop the avian flu. That’s disgusting.”

Chances are that you’ve used some of these fallacies in a debate or argument. Indeed, you may have convinced someone to choose X rather than Y using them. Though these fallacies may be persuasive, it’s useful to remember that they have nothing to do with truth.

What We Don’t Know and Don’t See

It’s hard to think critically when you don’t know what you’re missing. As we think about improving our thinking, we need to account for two things that are so subtle that we don’t fully recognize them:

- Assumptions – we make so many assumptions about the world around us that we can’t possibly keep track of them all. We make assumptions about the way the world works, about who we are and how we fit, and about the right way to do things (the way we’ve always done them). A key tenet of critical thinking is that we should question our own thinking. But it’s hard to question our assumptions if we don’t even realize that we’re making assumptions.

- Sensory filters – our eyes are bombarded with millions of images each second, but our brain can only process roughly a dozen images per second. We filter out everything else. And it’s not just visual data: we also filter information that comes through our other senses, such as sounds and smells. In other words, we’re not getting a complete picture. How can we think critically when we don’t know what we’re not seeing? Additionally, my picture of reality differs from your picture of reality (or anyone else’s, for that matter). How can we communicate effectively when we don’t have the same pictures in our heads?

Because of assumptions and filters, we often talk past each other. The world is a confusing place and becomes even more confusing when our perception of what’s “out there” is unique. How can we overcome these effects? We need to consider two sets of questions:

- How can we identify the assumptions we’re making? – I find that the best method is to compare notes with other people, especially people who differ from me in some way. Perhaps they work in a different industry, come from a different country, or belong to a different political party. As we discuss what we perceive, we can start to see our own assumptions. Additionally, we can think about assumptions that have changed over time. Why did we once assume X but now assume Y? How did we arrive at X in the first place? What’s changed to move us toward Y? Did external reality change, or did we change?

- How can we identify what we’re not seeing (or hearing, etc.)? – This is a hard problem to solve. We’ve learned to filter information over our entire lifetimes. We don’t know what we don’t see. Here are two suggestions:

- Make the effort to see something new – let’s say that you drive the same route to work every day. Tomorrow, when you drive the route, make an effort to see something that you’ve never seen before. What is it? Why do you think you missed it before? Does the thing you missed belong to a category? Are you missing the entire category? Here’s an example: I tend not to see houseplants. My wife tends not to see classic cars. Go figure.

- View a scene with a friend or loved one. Write down what you see. Ask the other person to do the same. What are the differences? Why do they exist?

The more we study assumptions and filters, the more attuned we become to their prevalence. When we make a decision, we’ll remember to inquire about ourselves before we inquire about the world around us. That will lead us to better decisions.

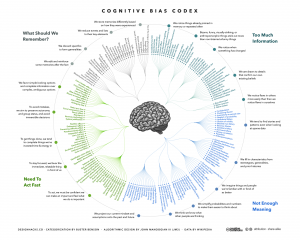

How Many Cognitive Biases Are There?

In my critical thinking class, we begin by studying 17 cognitive biases that are drawn from Peter Facione’s excellent textbook, Think Critically. (I’ve also summarized them in four earlier posts.) I like the way Facione organizes and describes the major biases. His work is very teachable. And 17 is a manageable number of biases to teach and discuss.

While the 17 biases provide a good introduction to the topic, there are more biases that we need to be aware of. For instance, there’s the survivorship bias. Then there’s the swimmer’s body fallacy. And the IKEA effect. And the self-herding bias. And don’t forget the fallacy fallacy. How many biases are there in total? Well, it depends on who’s counting and how many hairs we’d like to split. One author says there are 25. Another suggests that there are 53. Whatever the precise number, there are enough cognitive biases that leading consulting firms like McKinsey now have “debiasing” practices to help their clients make better decisions.

The ultimate list of cognitive biases probably comes from Wikipedia, which identifies 104 biases. Frankly, I think Wikipedia is splitting hairs. But I do like the way Wikipedia organizes the various biases into four major categories. The categorization helps us think about how biases arise and, therefore, how we might overcome them. The four categories are:

1) Biases that arise from too much information – examples include: We notice things already primed in memory. We notice (and remember) vivid or bizarre events. We notice (and attend to) details that confirm our beliefs.

2) Not enough meaning – examples include: We fill in blanks from stereotypes and prior experience. We conclude that things that we’re familiar with are better in some regard than things we’re not familiar with. We calculate risk based on what we remember (and we remember vivid or bizarre events).

3) How we remember – examples include: We reduce events (and memories of events) to the key elements. We edit memories after the fact. We conflate memories of events that happened at similar times but in different places, in the same place but at different times, or with the same people.

4) The need to act fast – examples include: We favor simple options with more complete information over more complex options with less complete information. Inertia – if we’ve started something, we continue to pursue it rather than changing to a different option.

It’s hard to keep 17 things in mind, much less 104. But we can keep four things in mind. I find that these four categories are useful because, as I make decisions, I can ask myself simple questions, like: “Hmmm, am I suffering from too much information or not enough meaning?” I can remember these categories and carry them with me. The result is often a better decision.

You Too Can Be A Revered Leader!

I just spotted this article on Inc. magazine’s website:

Want to Be A Revered Leader? Here’s How The 25 Most Admired CEOs Win The Hearts of Their Employees.

The article’s subhead is: “America’s 25 most admired CEOs have earned the respect of their people. Here’s how you can too.”

Does this sound familiar? It’s a good example of the survivorship fallacy. The 25 CEOs selected for the article “survived” a selection process. The author then highlights the common behaviors among the 25 leaders. The implication is that, if you behave the same way, you too will become a revered leader.

Is it true? Well, think about the hundreds of CEOs who didn’t survive the selection process. I suspect that many of the unselected CEOs behave in ways that are similar to the 25 selectees. But the unselected CEOs didn’t become revered leaders. Why not? Hard to say, precisely because we’re not studying them. It’s not at all clear to me that I will become a revered leader if I behave like the 25 selectees. In fact, the reverse may be true: people may think that I’m being inauthentic and lose respect for me.

A better research method would be to select 25 leaders who are “revered” and compare them to 25 leaders who are not “revered”. (Defining what “revered” means will be slippery.) By selecting two groups, we have some basis for comparison and contrast. This can often lead to deeper insights.
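The next example uses made-up numbers to show why a comparison group matters. It is a minimal Python sketch, and everything in it is hypothetical. That includes the `Leader` record, the `has_trait` flag (standing in for any behavior the article praises) and the population itself. For illustration, it assumes the behavior is just as common among non-revered leaders as among revered ones.

```python
from dataclasses import dataclass


@dataclass
class Leader:
    name: str
    has_trait: bool  # hypothetical behavior praised in the article
    revered: bool    # outcome; defining "revered" is slippery in practice


def trait_rate(leaders):
    """Fraction of the given leaders who show the trait (0.0 for an empty group)."""
    if not leaders:
        return 0.0
    return sum(leader.has_trait for leader in leaders) / len(leaders)


def compare(leaders):
    """Trait rate among revered leaders versus everyone else."""
    revered = [leader for leader in leaders if leader.revered]
    others = [leader for leader in leaders if not leader.revered]
    return trait_rate(revered), trait_rate(others)


# Hypothetical population: 4 of 5 revered leaders show the trait,
# and so do 16 of 20 leaders who are not revered.
population = (
    [Leader(f"R{i}", i < 4, True) for i in range(5)]
    + [Leader(f"N{i}", i < 16, False) for i in range(20)]
)

revered_rate, other_rate = compare(population)
print(f"revered: {revered_rate:.0%}, not revered: {other_rate:.0%}")
# prints "revered: 80%, not revered: 80%"
```

Suppose we studied only the five "survivors". An 80% rate would then look like a recipe for reverence. The comparison group shows the same 80%, so in this invented population the behavior tells us nothing about who becomes revered.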

As it stands, the Inc. article reminds me of the book for teenagers called How To Be Popular. It’s cute but not very meaningful.

Is Critical Thinking The Ultimate Job Skill?

Interesting syllogism. But the premise is unsound. That’s why I get the big bucks.

When I went off to college, my mother told me, “Now remember … you’re going to college to learn how to think. Don’t miss that lesson.”

I wonder what she would say if she were sending me off to college today. It might be more along the lines of, “Now remember … you’re going to college to get a good job. Don’t blow it.”

We can only judge programs and processes based on their goals. If the goal of government is to provide good services at a reasonable cost, we might give it a fairly low grade. However, if the goal of government is to increase employment, then we might evaluate it more positively. The same is true of higher education. So what is the goal of higher education? Is it to teach students how to think? Or is it to provide them skills to get a job?

I would argue that the goal of higher education – indeed of any education – is to improve the students’ ability to think. Good thinking can certainly help you get a job. In fact, it may be the ultimate job skill. But good thinking can take you much farther than a good job. Here’s my thinking on the issue:

1) Thinking is foundational — the essence of running a business (or a government) is to make decisions about the future. To make effective decisions, we need to understand how we think, how our thinking can be biased, and how to evaluate evidence and arguments. If we know everything about finance, for instance, but don’t know how to think effectively, we will make decisions based on faulty evidence, weak arguments, and unconscious biases. By chance, we might still make some good decisions. But we should remember Louis Pasteur’s thought, “Chance favors the prepared mind.”

2) The future is unknowable — our niece, Amelia, will graduate from college next May. If she works until she’s 65, she’ll retire in the year 2060. What skills will employers need in 2060? Who knows? As I reflect on my own education, the content I learned in college is largely useless today. The processes I learned, however, are still very relevant. Thinking is the ultimate process. Amelia will still need to think effectively in 2060.

3) Thinking promotes freedom – if we can’t think for ourselves, we will forever be buffeted by other people’s agendas, desires, ambitions, and rhetorical excesses. Critical thinking allows us to assess ideas and social movements and make effective decisions on our own. We can frame our thinking as we wish and not allow others to create frames for us. We can identify the truth rather than relying on others to tell us what is true. Critical thinking allows us to take control of our own destiny, which is the essence of freedom. I don’t know of any other discipline that can make the same claim.