What We Don’t Know and Don’t See

It’s hard to think critically when you don’t know what you’re missing. As we think about improving our thinking, we need to account for two things that are so subtle that we don’t fully recognize them:

- Assumptions – we make so many assumptions about the world around us that we can’t possibly keep track of them all. We make assumptions about the way the world works, about who we are and how we fit, and about the right way to do things (the way we’ve always done them). A key tenet of critical thinking is that we should question our own thinking. But it’s hard to question our assumptions if we don’t even realize that we’re making assumptions.

- Sensory filters – our eyes are bombarded with millions of images each second. But our brain can only process roughly a dozen images per second. We filter out everything else. We filter out enormous amounts of visual data, but we also filter information that comes through our other senses – sounds, smells, etc. In other words, we’re not getting a complete picture. How can we think critically when we don’t know what we’re not seeing? Additionally, my picture of reality differs from your picture of reality (or anyone else’s for that matter). How can we communicate effectively when we don’t have the same pictures in our heads?

Because of assumptions and filters, we often talk past each other. The world is a confusing place and becomes even more confusing when our perception of what’s “out there” is unique. How can we overcome these effects? We need to consider two sets of questions:

- How can we identify the assumptions we’re making? – I find that the best method is to compare notes with other people, especially people who differ from me in some way. Perhaps they work in a different industry or come from a different country or belong to a different political party. As we discuss what we perceive, we can start to see our own assumptions. Additionally, we can think about our assumptions that have changed over time. Why did we once assume X but now assume Y? How did we arrive at X in the first place? What’s changed to move us toward Y? Did external reality change or did we change?

- How can we identify what we’re not seeing (or hearing, etc.)? – This is a hard problem to solve. We’ve learned to filter information over our entire lifetimes. We don’t know what we don’t see. Here are two suggestions:

  - Make the effort to see something new – let’s say that you drive the same route to work every day. Tomorrow, when you drive the route, make an effort to see something that you’ve never seen before. What is it? Why do you think you missed it before? Does the thing you missed belong to a category? Are you missing the entire category? Here’s an example: I tend not to see houseplants. My wife tends not to see classic cars. Go figure.

  - View a scene with a friend or loved one. Write down what you see. Ask the other person to do the same. What are the differences? Why do they exist?

The more we study assumptions and filters, the more attuned we become to their prevalence. When we make a decision, we’ll remember to inquire about ourselves before we inquire about the world around us. That will lead us to better decisions.

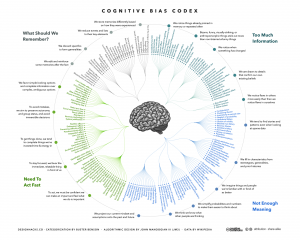

How Many Cognitive Biases Are There?

In my critical thinking class, we begin by studying 17 cognitive biases that are drawn from Peter Facione’s excellent textbook, Think Critically. (I’ve also summarized these here, here, here, and here). I like the way Facione organizes and describes the major biases. His work is very teachable. And 17 is a manageable number of biases to teach and discuss.

While the 17 biases provide a good introduction to the topic, there are more biases that we need to be aware of. For instance, there’s the survivorship bias. Then there’s the swimmer’s body fallacy. And the Ikea effect. And the self-herding bias. And don’t forget the fallacy fallacy. How many biases are there in total? Well, it depends on who’s counting and how many hairs we’d like to split. One author says there are 25. Another suggests that there are 53. Whatever the precise number, there are enough cognitive biases that leading consulting firms like McKinsey now have “debiasing” practices to help their clients make better decisions.

The ultimate list of cognitive biases probably comes from Wikipedia, which identifies 104 biases. (Click here and here). Frankly, I think Wikipedia is splitting hairs. But I do like the way Wikipedia organizes the various biases into four major categories. The categorization helps us think about how biases arise and, therefore, how we might overcome them. The four categories are:

1) Biases that arise from too much information – examples include: We notice things already primed in memory. We notice (and remember) vivid or bizarre events. We notice (and attend to) details that confirm our beliefs.

2) Not enough meaning – examples include: We fill in blanks from stereotypes and prior experience. We conclude that things that we’re familiar with are better in some regard than things we’re not familiar with. We calculate risk based on what we remember (and we remember vivid or bizarre events).

3) How we remember – examples include: We reduce events (and memories of events) to the key elements. We edit memories after the fact. We conflate memories that happened at similar times even though in different places or that happened in the same place even though at different times, … or with the same people, etc.

4) The need to act fast – examples include: We favor simple options with more complete information over more complex options with less complete information. Inertia – if we’ve started something, we continue to pursue it rather than changing to a different option.

It’s hard to keep 17 things in mind, much less 104. But we can keep four things in mind. I find that these four categories are useful because, as I make decisions, I can ask myself simple questions, like: “Hmmm, am I suffering from too much information or not enough meaning?” I can remember these categories and carry them with me. The result is often a better decision.

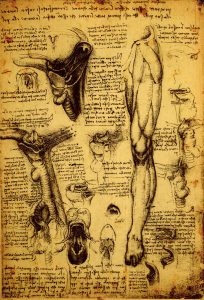

Veni, Vidi, Da Vinci

What made Leonardo da Vinci a genius? I’ve just finished Walter Isaacson’s all-purpose biography of the Italian Renaissance man and four words come to mind: curiosity, observation, analogy, and humility. These four traits combined and recombined throughout Leonardo’s life to create advances in painting, engineering, architecture, anatomy, hydrology, optics, weaponry, and theater.

Leonardo maintained a childlike curiosity throughout his life. Every kid wants to know why the sky is blue. As we reach adulthood, most of us simply accept the fact that the sky is indeed blue. Not Leonardo. He studied the question for much of his adult life and ultimately developed an explanation based on clear evidence and careful reasoning.

Leonardo combined his curiosity with powers of observation that beggar belief. He noticed, for instance, that dragonflies have four wings, two forward and two aft. He wondered how they worked. By close observation, he concluded that when the two forward wings go up, the two aft wings go down. It seems like a simple observation but it must have taken hours of acute and disciplined observation.

Similarly, he took an interest in how birds flap their wings. Do their wings move faster as they flap upward or downward? Most of us would not conceive of such a question, much less focus our attention sufficiently to answer it. But Leonardo did, not once but several times.

His first observations – taken over many hours and days – suggested that birds flap their wings faster on the down stroke. At this point, I would have recorded my observation, concluded that all birds behaved similarly, and moved on. But Leonardo persisted. He observed different bird species and found that some flap faster on the up stroke than the down stroke. Wing behavior differs by species. It’s an interesting observation about birds. It’s a fascinating observation about Leonardo. Arthur Bloch once said that, “A conclusion is the place where you get tired of thinking.” Leonardo, apparently, never got tired of thinking. He recognized that all conclusions are tentative.

Leonardo didn’t just study insects and birds. He studied pretty much everything – from rivers to trees to pumps to blood circulation to optical effects to anatomy to geometry to Latin to … well, whatever caught his eye. His breadth of knowledge allowed him to draw analogies that others would have missed. Some of his analogies seem “obvious” to us today – like the analogy between water flow in rivers and blood flow in humans.

But how about the analogy between arteriosclerosis and oranges? As he dissected cadavers, Leonardo noticed that older people’s arteries were often less flexible and more clogged than those of younger people. He became the first person in history to accurately describe arteriosclerosis. He did so by comparing it to the rind of an orange that dries, stiffens, and thickens as it ages.

In today’s world, expertise is often associated with narrowness. Experts gain ever more knowledge about ever-narrower subjects. Leonardo was certainly an expert in several narrow domains. But he also saw across domains and could think outside the frame. Philip Tetlock, an authority on political judgment, suggests that this ability to think across boundaries is a key ingredient to insight and discovery. In many ways, Leonardo thought more like a fox and less like a hedgehog.

If I could think and observe like Leonardo, I might become a bit of a prima donna. But Leonardo never did. He remained humble and diffident in an ever-changing world. As Isaacson notes, he “… relished a world in flux.” Further, “When he came up with an idea, he devised an experiment to test it. And when his experience showed that a theory was flawed … he abandoned his theory and sought a new one.” By revising and updating his own conclusions, Leonardo helped establish a world in which observation and experience trump dogma and received wisdom.

Leonardo’s legacy is so broad and so varied that it’s difficult to encapsulate succinctly. But Isaacson includes a quote from the German philosopher Schopenhauer that helps us understand his impact: “Talent hits a target that no one else can hit. Genius hits a target that no one else can see.”

Are Machines Better Than Judges?

Police make about 10 million arrests every year in the United States. In many cases, a judge must then make a jail or bail decision. Should the person be jailed until the trial or can he or she be released on bail? The judge considers several factors and predicts how the person will behave. There are several relevant outcomes if the person is released:

1) He or she will show up for trial and will not commit a crime in the interim.

2) He or she will commit a crime prior to the trial.

3) He or she will not show up for the trial.

A person in Category 1 should be released. People in Categories 2 and 3 should be jailed. Two possible error types exist:

Type 1 – a person who should be released is jailed.

Type 2 – a person who should be jailed is released.
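To make the two error types concrete, here is a minimal sketch in Python. It is purely illustrative: the `Case` record, the `would_offend` field, and the five sample cases are hypothetical and are not drawn from the study. It also assumes we somehow know how every person would have behaved if released, which real data cannot tell us for people who were actually jailed.

```python
from dataclasses import dataclass


@dataclass
class Case:
    jailed: bool        # the decision that was made
    would_offend: bool  # hypothetical: would commit a crime or skip trial if released


def error_counts(cases):
    """Return (type1, type2) counts for a list of cases."""
    # Type 1: jailed, although the person should have been released.
    type1 = sum(1 for c in cases if c.jailed and not c.would_offend)
    # Type 2: released, although the person should have been jailed.
    type2 = sum(1 for c in cases if not c.jailed and c.would_offend)
    return type1, type2


# Invented sample data, for illustration only.
cases = [
    Case(jailed=True, would_offend=False),   # Type 1 error
    Case(jailed=False, would_offend=True),   # Type 2 error
    Case(jailed=True, would_offend=True),    # correct: jailed
    Case(jailed=False, would_offend=False),  # correct: released
    Case(jailed=True, would_offend=False),   # Type 1 error
]

type1, type2 = error_counts(cases)
print(type1, type2)  # prints: 2 1
```

Any decision maker, human or machine, can be scored this way. The question is whether one of them produces fewer errors of either type.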

Jail, bail, and criminal records are public information and researchers can massively aggregate them. Jon Kleinberg, a professor of computer science at Cornell, and his colleagues did exactly that and produced a National Bureau of Economic Research Working Paper earlier this year.

Kleinberg and his colleagues asked an intriguing question: Could a machine-learning algorithm, using the same information available to judges, reach different decisions than the human judges and reduce either Type 1 or Type 2 errors or both?

The simple answer: yes, a machine can do better.

Kleinberg and his colleagues first studied 758,027 defendants arrested in New York City between 2008 and 2013. The researchers developed an algorithm and used it to decide which defendants should be jailed and which should be bailed. There are several different questions here:

- Would the algorithm make different decisions than the judges?

- Would the decisions provide societal benefits by either:

  - Reducing crimes committed by people who were erroneously released?

  - Reducing the number of people held in jails unnecessarily?

The answer to the first question is very clear: the algorithm produced decisions that varied in important ways from those that the judges actually made.

The algorithm also produced significant societal benefits. If we wanted to hold the crime rate the same, we would have needed to jail only 48.2% of the people who were actually jailed. In other words, 51.8% of those jailed could have been released without increasing the crime rate. On the other hand, if we kept the number of people in jail the same – but changed the mix of who was jailed and who was bailed – the algorithm could reduce the number of crimes committed by those on bail by 75.8%.
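The idea of keeping the jail count the same while changing the mix can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the researchers’ method: the risk scores, the six defendants, and the function names are all hypothetical, and the sketch simply assumes that some model has already assigned each defendant a predicted risk.

```python
def crimes_by_released(defendants, jailed_ids):
    """Count released defendants who would go on to offend."""
    return sum(d["would_offend"] for d in defendants if d["id"] not in jailed_ids)


def rerank_same_jail_count(defendants, jailed_ids):
    """Jail the same number of people, but pick those with the highest risk."""
    k = len(jailed_ids)
    ranked = sorted(defendants, key=lambda d: d["risk"], reverse=True)
    return {d["id"] for d in ranked[:k]}


# Invented data: "risk" is a hypothetical predicted probability of offending.
defendants = [
    {"id": 1, "risk": 0.9, "would_offend": True},
    {"id": 2, "risk": 0.2, "would_offend": False},
    {"id": 3, "risk": 0.7, "would_offend": True},
    {"id": 4, "risk": 0.1, "would_offend": False},
    {"id": 5, "risk": 0.6, "would_offend": False},
    {"id": 6, "risk": 0.3, "would_offend": True},
]

judge_jailed = {2, 4, 5}  # hypothetical human decisions
model_jailed = rerank_same_jail_count(defendants, judge_jailed)

before = crimes_by_released(defendants, judge_jailed)
after = crimes_by_released(defendants, model_jailed)
print(sorted(model_jailed), before, after)  # prints: [1, 3, 5] 3 1
```

In this invented example, three people are jailed either way, but crimes by released defendants fall from three to one. The model is not perfect (defendant 6 still offends). It only needs to rank risk better than the original decisions did.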

The researchers replicated the study using nationwide data on 151,461 felons arrested between 1990 and 2009 in 40 urban counties scattered around the country. For this dataset, “… the algorithm could reduce crime by 18.8% holding the release rate constant, or holding the crime rate constant, the algorithm could jail 24.5% fewer people.”

Given the variables examined, the algorithm appears to make better decisions, with better societal outcomes. But what if the judges are acting on other variables as well? What if, for instance, the judges are considering racial information and aiming to reduce racial inequality? The algorithm would not be as attractive if it reduced crime but also exacerbated racial inequality. The researchers studied this possibility and found that the algorithm actually produces better racial equity. Most observers would consider this an additional societal benefit.

Similarly, the judges may have aimed to reduce specific types of crime – like murder or rape – while de-emphasizing less violent crime. Perhaps the algorithm reduces overall crime but increases violent crime. The researchers probed this question and, again, the results were negative. The algorithm did a better job of reducing all crimes, including very violent crimes.

What’s it all mean? For very structured predictions with clearly defined outcomes, an algorithm produced by machine learning can produce decisions that reduce both Type 1 and Type 2 errors as compared to decisions made by human judges.

Does this mean that machine algorithms are better than human judges? At this point, all we can say is that algorithms produce better results only when judges make predictions in very bounded circumstances. As the researchers point out, most decisions that judges make do not fit this description. For instance, judges regularly make sentencing decisions, which are far less clear-cut than bail decisions. To date, machine-learning algorithms are not sufficient to improve on these kinds of decisions.

(This article is based on NBER Working Paper 23180, “Human Decisions and Machine Predictions”, published in February 2017. The working paper is available here and here. It is copyrighted by its authors, Jon Kleinberg, Himabindu Lakkaraju, Jure Leskovec, Jens Ludwig, and Sendhil Mullainathan. The paper was also published, in somewhat modified form, as “Human Decisions and Machine Predictions” in The Quarterly Journal of Economics on 26 August 2017. The paper is behind a paywall but the abstract is available here).

The Dying Grandmother Gambit

I teach two classes at the University of Denver: Applied Critical Thinking and Persuasion Methods and Techniques. Sometimes I use the same teaching example for both classes. Take the dying grandmother gambit, for instance.

In this persuasive gambit, the speaker plays on our heartstrings by telling a very sad story about a dying grandmother (or some other close relative). The speaker aims to gain our agreement and encourages us to act. Notice that thinking is not required. In fact, it’s discouraged. The story often goes like this:

My grandmother was the salt of the earth. She worked hard her entire life. She raised good kids and played by the rules. She never complained; she just worked harder. She worked her fingers to the bone but she was always the picture of health … until her dying days when our government simply abandoned her. As her health failed, she moved into a nursing home. She wanted to stay. She thought she had earned it. But the government did X (or didn’t do Y). As a result, my dying grandmother was abandoned to her fate. She was kicked to the curb like an old soda can. In her last days, she was a tiny, wrinkled prune. She couldn’t hear or see. She just curled up in her bed and waited to die. But our faceless bureaucrats couldn’t have cared less. My grandmother never complained. That was not her way. But she cried. Oh lord, did she cry. I can still see the big salty tears rolling slowly down her cheeks. Sometimes her gown was soaked with tears. How much did the government care? Not a whit. It would have been so easy for the government to change its policy. They could have cancelled X (or done Y). But no, they let her die. Folks, I don’t want your grandparents to die this way. So I’ve dedicated my candidacy to changing the government policy. If I can save just one grandma from the same fate, I’ll consider my job done.

So, do I tell my classes this is a good thing or a bad thing? It depends on which class I’m teaching.

In my critical thinking class I point out the weakness of the evidence. It doesn’t make sense to decide government policy on a sample of one. Perhaps the grandmother represents a broader population. Or perhaps not. We have no way of knowing how representative her story is.

Further, we didn’t meet the dear old lady. We didn’t directly and dispassionately observe her conditions. We didn’t speak to her caretakers. Or to those faceless bureaucrats. We only heard the story and we heard it from a person who stands to benefit from our reaction. The speaker may well have embroidered or embellished the story.

Further, the speaker is playing on the vividness fallacy. We remember vivid things, especially things that result in loss, or death, or dismemberment. Because we remember them, we overestimate their probability. We think they’re far more likely to happen than they really are. If we invoke our critical thinking skills, we may recognize this. But the speaker aims to drown our thinking in a flood of emotions.

In my critical thinking class, I point out the hazards of succumbing to the story. In my persuasion class, on the other hand, I suggest that it’s a very good way to influence people.

The dying grandmother is a vivid and emotional story. It flies below our System 2 radar and aims directly at our System 1. It aims to influence us emotionally, not conceptually. It’s influential because it’s a good story. A story can do what data can never do. It can engage us and enrage us.

Further, the dying grandmother puts a very effective face on the issue. The issue is no longer about numbers. It’s about flesh and blood. We would be very hardhearted to ignore it. So we don’t ignore it. Instead, our emotions pull us closer to the speaker’s position.

So is the dying grandmother gambit good or bad? It’s neither. It just is. We need to recognize when someone manipulates our emotions. Then we need to put on our critical thinking caps.