That’s Irrelevant!

In her book, Critical Thinking: An Appeal To Reason, Peg Tittle has an interesting and useful way of organizing 15 logical fallacies. Simply put, they’re all irrelevant to the assessment of whether an argument is true or not. Using Tittle’s guidelines, we can quickly sort out what we need to pay attention to and what we can safely ignore.

Though these fallacies are irrelevant to truth, they are very relevant to persuasion. Critical thinking is about discovering the truth; it’s about the present and the past. Persuasion is about the future, where truth has yet to be established. Critical thinking helps us decide what we can be certain of. Persuasion helps us make good choices when we’re uncertain. Critical thinking is about truth; persuasion is about choice. What’s poison to one is often catnip to the other.

With that thought in mind, let’s take a look at Tittle’s 15 irrelevant fallacies. If someone tosses one of these at you in a debate, your response is simple: “That’s irrelevant.”

- Ad hominem: character – the person who created the argument is evil; therefore, the argument is unacceptable (or the reverse: the person is good, so the argument is acceptable).

- Ad hominem: tu quoque – the person making the argument doesn't practice what she preaches. Example: "You say it's wrong to hunt, but you eat meat."

- Ad hominem: poisoning the well – the person making the argument has something to gain; he's not disinterested. Example: "Before you listen to my opponent, I would like to remind you that he stands to profit handsomely if you approve this proposal."

- Genetic fallacy – judging an idea by its origin rather than by the argument itself. Example: "The idea came to you in a dream, so it's not acceptable."

- Inappropriate standard: authority – accepting a claim because an authority endorses it. An authority may claim to be an expert, but experts are biased in predictable ways.

- Inappropriate standard: tradition – we accept something because we have traditionally accepted it. But traditions are often fabricated.

- Inappropriate standard: common practice – just because everybody's doing it doesn't make it true. This is an appeal to inertia and the status quo. Example: most people peel bananas from the "wrong" end.

- Inappropriate standard: moderation/extreme – claiming that a position is true because it is moderate, or false because it is too extreme (or too moderate). The first form is also known as the Fallacy of the Golden Mean.

- Appeal to popularity: bandwagon effect – just because an idea is popular doesn't make it true. Often found in ads.

- Appeal to popularity: elites – treating an idea as better because only a few can make the grade or be admitted. "Many are called; few are chosen."

- Two wrongs – justifying one wrong by pointing to another. Example: our competitors have cheated; therefore, it's acceptable for us to cheat.

- Going off topic: straw man or paper tiger – misrepresenting the other side; responding to an argument other than the one actually presented.

- Going off topic: red herring – a false scent that leads the argument astray. Example: Person 1: "We shouldn't do that." Person 2: "If we don't do it, someone else will."

- Going off topic: non sequitur – making a statement that doesn't follow logically from what came before. Example: Person 1: "We should feed the poor." Person 2: "You're a communist."

- Appeal to emotion – using emotions rather than logic to assess truth. Example: "You say we should kill millions of chickens to stop the avian flu. That's disgusting."

Chances are that you’ve used some of these fallacies in a debate or argument. Indeed, you may have convinced someone to choose X rather than Y using them. Though these fallacies may be persuasive, it’s useful to remember that they have nothing to do with truth.

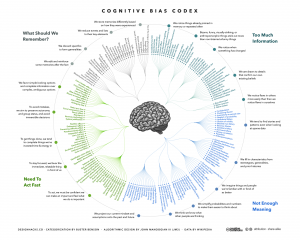

How Many Cognitive Biases Are There?

In my critical thinking class, we begin by studying 17 cognitive biases that are drawn from Peter Facione's excellent textbook, Think Critically. (I've also summarized these elsewhere.) I like the way Facione organizes and describes the major biases. His work is very teachable. And 17 is a manageable number of biases to teach and discuss.

While the 17 biases provide a good introduction to the topic, there are more biases that we need to be aware of. For instance, there's the survivorship bias. Then there's the swimmer's body fallacy. And the IKEA effect. And the self-herding bias. And don't forget the fallacy fallacy. How many biases are there in total? Well, it depends on who's counting and how many hairs we'd like to split. One author says there are 25. Another suggests that there are 53. Whatever the precise number, there are enough cognitive biases that leading consulting firms like McKinsey now have "debiasing" practices to help their clients make better decisions.

The ultimate list of cognitive biases probably comes from Wikipedia, which identifies 104 biases. Frankly, I think Wikipedia is splitting hairs. But I do like the way Wikipedia organizes the various biases into four major categories. The categorization helps us think about how biases arise and, therefore, how we might overcome them. The four categories are:

1) Biases that arise from too much information – examples include: We notice things already primed in memory. We notice (and remember) vivid or bizarre events. We notice (and attend to) details that confirm our beliefs.

2) Not enough meaning – examples include: We fill in blanks from stereotypes and prior experience. We conclude that things that we’re familiar with are better in some regard than things we’re not familiar with. We calculate risk based on what we remember (and we remember vivid or bizarre events).

3) How we remember – examples include: We reduce events (and memories of events) to the key elements. We edit memories after the fact. We conflate memories of events that happened at similar times but in different places, or in the same place but at different times, or with the same people.

4) The need to act fast – examples include: We favor simple options with more complete information over more complex options with less complete information. Inertia – if we’ve started something, we continue to pursue it rather than changing to a different option.

It’s hard to keep 17 things in mind, much less 104. But we can keep four things in mind. I find that these four categories are useful because, as I make decisions, I can ask myself simple questions, like: “Hmmm, am I suffering from too much information or not enough meaning?” I can remember these categories and carry them with me. The result is often a better decision.

You Too Can Be A Revered Leader!

I just spotted this article on Inc. magazine’s website:

Want to Be A Revered Leader? Here’s How The 25 Most Admired CEOs Win The Hearts of Their Employees.

The article’s subhead is: “America’s 25 most admired CEOs have earned the respect of their people. Here’s how you can too.”

Does this sound familiar? It's a good example of the survivorship fallacy. The 25 CEOs selected for the article "survived" a selection process. The author then highlights the common behaviors among the 25 leaders. The implication is that, if you behave the same way, you too will become a revered leader.

Is it true? Well, think about the hundreds of CEOs who didn't survive the selection process. I suspect that many of the unselected CEOs behave in ways similar to the 25 selectees. But the unselected CEOs didn't become revered leaders. Why not? Hard to say, precisely because we're not studying them. It's not at all clear to me that I will become a revered leader if I behave like the 25 selectees. In fact, the reverse may be true: people may think that I'm being inauthentic and lose respect for me.

A better research method would be to select 25 leaders who are "revered" and compare them to 25 leaders who are not "revered". (Defining what "revered" means will be slippery.) By selecting two groups, we have some basis for comparison and contrast. This can often lead to deeper insights.

As it stands, the Inc. article reminds me of the book for teenagers called How To Be Popular. It’s cute but not very meaningful.

Surviving The Survivorship Bias

You too can be popular.

Here are three articles from respected sources that describe the common traits of innovative companies:

The 10 Things Innovative Companies Do To Stay On Top (Business Insider)

The World’s 10 Most Innovative Companies And How They Do It (Forbes)

Five Ways To Make Your Company More Innovative (Harvard Business School)

The purpose of these articles – as Forbes puts it – is to answer a simple question: “…what makes the difference for successful innovators?” It’s an interesting question and one that I would dearly love to answer clearly.

The implication is that, if your company studies these innovative companies and implements similar practices, well, then … your company will be innovative, too. It’s a nice idea. It’s also completely erroneous.

How do we know the reasoning is erroneous? Because it suffers from the survivorship fallacy. The companies in these articles are picked because they are viewed as the most innovative or most successful or most progressive or most something. They "survive" the selection process. We study them and abstract out their common practices. We assume that these common practices cause them to be more innovative or successful or whatever.

The fallacy comes from an unbalanced sample. We only study the companies that survive the selection process. There may well be dozens of other companies that use similar practices but don’t get similar results. They do the same things as the innovative companies but they don’t become innovators. Since we only study survivors, we have no basis for comparison. We can’t demonstrate cause and effect. We can’t say how much the common practices actually contribute to innovation. It may be nothing. We just don’t know.
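To see why a survivors-only sample misleads, here's a toy simulation in Python. It's a sketch built on invented assumptions, not real data: 80% of companies adopt some hypothetical "innovation practice," and every company has a flat 5% chance of success that is completely unrelated to the practice. All names and numbers are illustrative.

```python
import random


def simulate(n_companies=100_000, adoption_rate=0.8, success_rate=0.05, seed=42):
    """Hypothetical model: success is independent of the practice by construction."""
    rng = random.Random(seed)
    companies = []
    for _ in range(n_companies):
        adopts = rng.random() < adoption_rate
        # Success ignores `adopts` entirely: the practice has zero causal effect here.
        succeeds = rng.random() < success_rate
        companies.append((adopts, succeeds))
    return companies


def share_with_practice(group):
    """Fraction of a group that uses the practice."""
    return sum(adopts for adopts, _ in group) / len(group)


def success_rate_of(group):
    """Fraction of a group that succeeded."""
    return sum(succeeds for _, succeeds in group) / len(group)


companies = simulate()

# Survivors-only view: study just the winners, as the articles do.
survivors = [c for c in companies if c[1]]
print(f"Winners using the practice: {share_with_practice(survivors):.0%}")

# Balanced view: compare adopters with non-adopters.
adopters = [c for c in companies if c[0]]
non_adopters = [c for c in companies if not c[0]]
print(f"Success rate, adopters:     {success_rate_of(adopters):.1%}")
print(f"Success rate, non-adopters: {success_rate_of(non_adopters):.1%}")
```

Looking only at the winners, roughly four out of five use the practice, so it looks like a secret of success. Comparing adopters with non-adopters shows nearly identical success rates, which is exactly the comparison a survivors-only study can't make.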

Some years ago, I found a book called How To Be Popular at a used-book store. Written for teenagers, it tells you what the popular kids do. If you do the same things, you too can be popular. It's cute and fluffy and meaningless. In fact, to someone beyond adolescence, it's obvious that it's meaningless. Doing what the popular kids do doesn't necessarily make you popular. We all know that.

I bought the book as a keepsake and a reminder that the survivorship fallacy can pop up at any moment. It’s obvious when it appears in a fluffy book written for teens. It’s less obvious when it appears in a prestigious business journal. But it’s still a fallacy.

Business School And The Swimmer’s Body Fallacy

He’s tall because he plays basketball.

Michael Phelps is a swimmer. He has a great body. Ian Thorpe is a swimmer. He has a great body. Missy Franklin is a swimmer. She has a great body.

If you look at enough swimmers, you might conclude that swimming produces great bodies. If you want to develop a great body, you might decide to take up swimming. After all, great swimmers develop great bodies.

Swimming might help you tone up and trim down. But you would also be committing a logical fallacy. Known as the swimmer’s body fallacy, it confuses selection criteria with results.

We may think that swimming produces great bodies. But, in fact, it's more likely that great bodies produce top swimmers. People with great bodies for swimming – like Ian Thorpe's size 17 feet – are selected for competitive swimming programs. Once again, we're confusing cause and effect.

Here’s another way to look at it. We all know that basketball players are tall. But would you accept the proposition that playing basketball makes you tall? Probably not. Height is not malleable. People grow to a given height because of genetics and diet, not because of the sports they play.

When we discuss height and basketball, the relationship is obvious. Tallness is a selection criterion for entering basketball. It’s not the result of playing basketball. But in other areas, it’s more difficult to disentangle selection factors from results. Take business school, for instance.

In fact, let’s take Harvard Business School or HBS. We know that graduates of HBS are often highly successful in the worlds of business, commerce, and politics. Is that success due to selection criteria or to the added value of HBS’s educational program?
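The question can be framed as a toy model. In this Python sketch, every number is an assumption chosen for illustration, not a fact about HBS: applicants have a hypothetical "ability" score, the school admits the top 10%, and later career success equals ability plus random luck, plus a `program_effect` for admitted students that we set to zero.

```python
import random
import statistics


def simulate_admissions(n_applicants=50_000, admit_fraction=0.1,
                        program_effect=0.0, seed=7):
    """Toy model: success = prior ability + luck (+ program_effect if admitted)."""
    rng = random.Random(seed)
    abilities = [rng.gauss(0, 1) for _ in range(n_applicants)]

    # Selection: admit only the top `admit_fraction` of applicants by ability.
    n_admitted = int(n_applicants * admit_fraction)
    cutoff = sorted(abilities, reverse=True)[n_admitted - 1]

    grads, others = [], []
    for ability in abilities:
        admitted = ability >= cutoff
        luck = rng.gauss(0, 1)
        success = ability + luck + (program_effect if admitted else 0.0)
        (grads if admitted else others).append(success)
    return statistics.mean(grads), statistics.mean(others)


# The program adds nothing here, yet graduates still look far more successful.
grad_mean, other_mean = simulate_admissions(program_effect=0.0)
print(f"Graduates: {grad_mean:.2f}   Everyone else: {other_mean:.2f}")
```

Even with the program contributing nothing, graduates come out well ahead of everyone else, purely because of who was admitted. Setting `program_effect` to a positive value widens the gap, but comparing graduates with non-graduates alone can't tell you which version of the world you're in.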

HBS is well known for pioneering the case study method of business education. Students look at successful (and unsuccessful) businesses and try to ferret out the causes. Yet we know that, in evidence-based medicine, case studies are considered to be very weak evidence.

According to medical researchers, a case study is Level 3 evidence on a scale of 1 to 4, where 4 is the weakest. Why is it so weak? Partially because it’s a sample of one.

It's also because of the survivorship bias. Let's say that Company A has implemented practices X, Y, and Z and has been wildly successful. We might infer that practices X, Y, and Z caused the success. Yet there are probably dozens of other companies that also implemented practices X, Y, and Z and weren't so successful. Those companies, however, didn't "survive" the process of being selected for a B-school case study. We don't account for them in our reasoning.

(The survivorship bias is sometimes known as the LeBron James fallacy. Just because you train like LeBron James doesn’t mean that you’ll play like him).

So we have some reasons to suspect the logical underpinnings of a case-based education method. Let's revisit the question: Is the success of HBS graduates due to selection criteria or to the results of the HBS educational program? HBS is filled with brilliant professors who conduct great research and write insightful papers and books. They should have some impact on students, even if they use weak evidence in their curriculum. Shouldn't they? Being a teacher, I certainly hope so. If so, then the success of HBS graduates is at least partially a result of the educational program, not just the selection criteria.

But I wonder …