Debiasing and Corporate Performance

Over the past several years, I’ve written a number of articles about cognitive biases. I hope I have alerted my readers to the causes and consequences of these biases. My general approach is simple: forewarned is forearmed.

I didn’t realize that I was participating in a more general trend known as debiasing. As Wikipedia notes, “Debiasing is the reduction of bias, particularly with respect to judgment and decision making.” The basic idea is that we can change things to help people and organizations make better decisions.

What can we change? According to A User’s Guide To Debiasing, we can do two things:

- Modify the decision maker (Type 1): we do this by “providing some combination of knowledge and tools to help [people] overcome their limitations and dispositions.”

- Modify the environment (Type 2): we do this by “alter[ing] the setting where judgments are made in a way that … encourages better strategies.”

I’ve been using a Type 1 approach. I’ve aimed at modifying the decision maker by providing information about the source of biases and describing how they skew our perception of reality. We often aren’t aware of the nature of our own perception and judgment. I liken my approach to making the fish aware of the water they’re swimming in. (To review some of my articles in this domain, click here, here, here, and here).

What does a Type 2 approach look like? How do we modify the environment? The general domain is called choice architecture. The idea is that we change the process by which the decision is made. The book Nudge by Richard Thaler and Cass Sunstein is often cited as an exemplar of this type of work. (My article on using a courtroom process to make corporate decisions fits in the same vein).

How important is debiasing in the corporate world? In 2013, McKinsey & Company surveyed 770 corporate board members to determine the characteristics of a high-performing board. The “biggest aspiration” of high-impact boards was “reducing decision biases”. As McKinsey notes, “At the highest level, boards look inward and aspire to more ‘meta’ practices—deliberating about their own processes, for example—to remove biases from decisions.”

More recently, McKinsey has written about the business opportunity in debiasing. They note, for instance, that businesses are least likely to question their core processes. Indeed, they may not even recognize that they are making decisions. In my terminology, they’re not aware of the water they’re swimming in. As a result, McKinsey concludes “…most of the potential bottom-line impact from debiasing remains unaddressed.”

What to do? Being a teacher, I would naturally recommend training and education programs as a first step. McKinsey agrees … but only up to a point. McKinsey notes that many decision biases are so deeply embedded that managers don’t recognize them. They swim blithely along without recognizing how the water shapes and distorts their perception. Or, perhaps more frequently, they conclude, “I’m OK. You’re Biased.”

Precisely because such biases frequently operate in System 1 (fast, automatic thinking) as opposed to System 2 (slow, deliberate thinking), McKinsey suggests a program consisting of both training and structural changes. In other words, we need to modify both the decision maker and the decision environment. I’ll write more about structural changes in the coming weeks. In the meantime, if you’d like a training program, give me a call.

Failure Is An Option

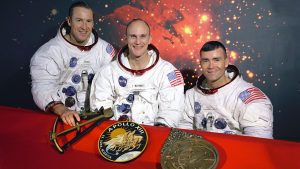

The movie Apollo 13 came out in 1995 and popularized the phrase “Failure is not an option”. The flight director, Gene Kranz (played by Ed Harris), repeated the phrase to motivate engineers to find a solution immediately. It worked.

I bet that Kranz’s signature phrase caused more failures in American organizations than any other single sentence in business history. I know it caused myriad failures – and a culture of fear – in my company.

Our CEO loved to spout phrases like “Failure is not an option” and “We will not accept failure here.” It made him feel good. He seemed to believe that repeating the mantra could banish failure forever. It became a magical incantation.

Of course, we continued to have failures in our company. We built complicated software and we occasionally ran off the rails. What did we do when a failure occurred? We buried it. Better a burial than a “public hanging”.

The CEO’s mantra created a perverse incentive. He wanted to eliminate failures. We wanted to keep our jobs. To keep our jobs, we had to bury our failures. Because we buried them, we never fixed the processes that led to the failures in the first place. Our executives could easily conclude that our processes were just fine. After all, we didn’t have any failures, did we?

As we’ve learned elsewhere, design thinking is all about improving something and then improving it again and then again and again. How can we design a corporate culture that continuously improves?

One answer is the concept of the just culture. A just culture acknowledges that failures occur. Many failures result from systemic or process problems rather than from individual negligence. It’s not the person; it’s the system. A just culture aims to improve the system to 1) prevent failures wherever possible and 2) ameliorate failures when they do occur. In a sense, it’s a culture designed to improve itself.

According to Barbara Brunt, “A just culture recognizes that individual practitioners should not be held accountable for system failings over which they have no control.” Rather than hiding system failures, a just culture encourages employees to report them. Designers can then improve the systems and processes. As the system improves, the culture also improves. Employees realize that reporting failures leads to good outcomes, not bad ones. It’s a virtuous circle.

The concept of a just culture is not unlike appreciative inquiry. Managers recognize that most processes work pretty well. They appreciate the successes. Failure is an exception – it’s a cause for action and design thinking as opposed to retribution. We continue to appreciate the employee as we redesign the process.

The just culture concept has established a firm beachhead among hospitals in the United States. That makes sense because hospital mistakes can be especially tragic. But I wonder if the concept shouldn’t spread to a much wider swath of companies and agencies. I can certainly think of a number of software companies that could improve their quality by improving their culture. Ultimately, I suspect that every organization could benefit by adopting a simple principle of just culture: if you want to improve your outcomes, recruit your employees to help you.

I’ve learned a bit about just culture because one of my former colleagues, Kim Ross, recently joined Outcome Engenuity, the leading consulting agency in the field of just culture. You can read more about them here. You can learn more about hospital use of just culture by clicking here, here, and here.

Too Much Rhetoric? Or Not Enough?

In the Western world, the art of persuasion (aka rhetoric) appeared first in ancient Athens. We might well ask: why did it emerge there and then, as opposed to another place and another time?

In his book, Words Like Loaded Pistols, Sam Leith argues that rhetoric blossomed first in Greece because that’s where democracy emerged. Prior to that, we didn’t need to argue or persuade or create ideas — at least not in the public sphere. We just accepted as true whatever the monarch said was true. There was no point in arguing. The monarch wasn’t going to budge.

Because the Greeks allowed citizens from different walks of life to speak in the public forum, they were the first people who needed to manage ideas and arguments. In response, they developed the key concepts of rhetoric. They also established the idea that rhetoric was an essential element of good leadership. A leader needed to manage the passions of the moment by speaking logically, clearly, and persuasively.

Through the 19th century, well-educated people were thoroughly schooled in rhetoric as well as the related disciplines of logic and grammar. These were known as the trivium and they helped us manage public ideas. Debates, governed by the rules of rhetoric, helped us create new ideas. Thesis led to antithesis led to synthesis. We considered the trivium to be an essential foundation for good leadership. Leaders have to create ideas, explain ideas, and defend ideas. The trivium provided the tools.

Then in the 20th century, we decided that we didn’t need to teach these skills anymore. Leith argues that we came to see history as an impersonal, overwhelming, uncontrollable force in its own right. Why argue about it if we can’t control it? Courses in rhetoric — and leadership — withered away.

It’s interesting to look at rhetoric as an essential part of democracy. It’s not something to be scorned. It’s something to be promoted. I wonder if some of our partisan anger and divisiveness doesn’t result from the lack of rhetoric in our society. We don’t have too much rhetoric. Rather, we have too little. We have forgotten how to argue without anger.

I’m happy to see that rhetoric and persuasion classes are making a comeback in academia today. Similarly, courses in leadership seem to be flowering again. Perhaps we can look forward to using disagreements to create new ideas rather than to destroy them.

Innovating The Innovations

Mashup thinking is an excellent way to develop new ideas and products. Rather than thinking outside the box (always difficult), you select ideas from multiple boxes and mash them together. Sometimes, nothing special happens. Sometimes, you get a genius idea.

Let’s mash up self-driving vehicles and drones to see what we get. First, let’s look at the current paradigms:

Self-driving vehicles (SDVs) include cars and trucks that use special sensors to navigate existing public roadways autonomously to a given destination. The vehicles navigate a two-dimensional surface and should be able to get humans or packages from Point A to Point B more safely than human-driven vehicles. Individuals may not buy SDVs the way we have traditionally bought cars and trucks; we may simply call them when needed. Though the technology is rapidly improving, the legal and ethical systems still require a great deal of work.

Drones navigate three-dimensional space and are not autonomous. Rather, specially trained pilots fly them remotely. (They are often referred to as Remotely Piloted Aircraft, or RPAs.) The military uses drones for several missions, including surveillance, intelligence gathering, and attacks on ground targets. To date, we haven’t heard of drones attacking airborne targets, but it’s certainly possible. Increasingly, businesses are considering drones for package delivery. The general paradigm is that a small drone will pick up a package from a warehouse (perhaps an airborne warehouse) and deliver it to a home or office or to troops in the field.

So, what do we get if we mash up self-driving vehicles and drones?

The first idea that comes to mind is an autonomous drone. Navigating 3D space is actually simpler than navigating 2D space: you can fly over or under an approaching object. (As a result, train traffic controllers have a more difficult job than air traffic controllers.) Why would we want self-flying drones? Conceivably they would be more efficient, less costly, and safer than the human-piloted equivalents. They also have a lot more space to operate in and don’t require a lot of asphalt.

We could also change the paradigm for what drones carry. Today, we think of them as carrying packages. Why not people, just like SDVs? It shouldn’t be terribly hard to design a drone that could comfortably carry a couple from their house to the theater and back. We’ll be able to whip out our smartphones, call Uber or Lyft, and have a drone pick us up. (I hope Lyft has trademarked the term Air Lyft.)

What else? How about combining self-flying drones with self-driving vehicles? Today’s paradigm for drone deliveries is that an individual drone goes to a warehouse, picks up a package, and delivers it to an individual address. Even if the warehouse is airborne and mobile, that’s horribly inefficient. Instead, let’s try this: a self-driving truck picks up hundreds of packages to be delivered along a given route. The truck also carries dozens of drones. As the truck passes near an address, a drone picks up the right package and flies it to the doorstep. Of course, we could do this only if the drones are autonomous; the task is too complicated for a human operator.
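The truck-and-drone handoff can be sketched as a simple planning step. This is a toy illustration under stated assumptions, not a real routing system: the `Package` class, the `plan_drone_launches` function, the flat (x, y) coordinates in kilometers, and the fixed drone range are all hypothetical. For each package, the sketch finds the point on the truck’s route closest to the delivery address and schedules a drone launch there if the address is within range; anything out of range stays on the truck.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # hypothetical flat (x, y) coordinates in km


@dataclass
class Package:
    package_id: str
    address: Point  # delivery address on the same hypothetical grid


def plan_drone_launches(
    route: List[Point], packages: List[Package], drone_range_km: float
) -> Tuple[List[Tuple[int, str]], List[str]]:
    """Assign each package to the route waypoint nearest its address.

    Assumes route is non-empty. Returns (launches, unreachable):
    launches holds (waypoint_index, package_id) pairs sorted by waypoint,
    i.e. in the order the truck reaches them; unreachable lists packages
    farther than drone_range_km from every waypoint.
    """
    launches: List[Tuple[int, str]] = []
    unreachable: List[str] = []
    for pkg in packages:
        # Find the waypoint on the truck's route closest to this address.
        index, stop = min(
            enumerate(route), key=lambda item: math.dist(item[1], pkg.address)
        )
        if math.dist(stop, pkg.address) <= drone_range_km:
            launches.append((index, pkg.package_id))
        else:
            unreachable.append(pkg.package_id)
    launches.sort()
    return launches, unreachable


if __name__ == "__main__":
    route = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
    packages = [
        Package("A", (1.2, 0.5)),
        Package("B", (3.9, -0.3)),
        Package("C", (2.0, 5.0)),
    ]
    launches, unreachable = plan_drone_launches(route, packages, drone_range_km=1.0)
    print(launches)     # [(1, 'A'), (4, 'B')]
    print(unreachable)  # ['C']
```

A real system would also have to account for drone battery life, payload limits, and the truck’s speed, none of which this sketch models.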

I could go on … but let’s also investigate the knock-on effects. If what I’ve described comes to pass, what else will happen? Here are some challenges that will probably come up:

- If drones can carry people as well as packages, we’ll need fewer roadways. What will we do with obsolete roads? We’ll probably need fewer airports, too. What will we do with them?

- If people no longer buy personal vehicles but call transportation on demand:

  - We’ll need far fewer parking lots. How can cities use the space to revitalize themselves?

  - Automobile companies will implode. How do we retrain automobile executives and workers?

  - We’ll burn far less fossil fuel. This will be good for the environment but bad for, say, oil companies and oil workers. How do we share the burden?

- If combined vehicles – drones and SDVs – deliver packages, millions of warehouse workers and drivers will lose their jobs. Again, how do we share the burden?

- If autonomous drones can attack airborne targets, do we really need expensive, human-piloted fighter jets?

These are intriguing predictions as well as troublesome challenges. But the thought process for generating them is quite simple: mash up good ideas from multiple boxes. You, too, can predict the future.

The Cost of Bad Writing

My students know that I’m a stickler for good writing. When they ask me why I’m so picky, my answer usually boils down to something that’s logically akin to, “Because I said so.”

I know that the ability to write effectively has helped my career. But is it really so important in today’s world of instant communications? Only if you want to save $400 billion a year.

Josh Bernoff, the owner of WOBS LLC, recently published his survey of 547 business professionals who write “at least two hours per week for work, excluding e-mail”. Bernoff’s findings make a clear and compelling case for teaching (and mastering) effective writing skills. His key findings:

- Reading and writing is a full-time job. Bernoff’s respondents, most of whom were not full-time editors or writers, spend about 25.5 hours reading and 20.4 hours writing each week, nearly 46 hours in all.

- Though we complain about e-mail, it takes up only about a third of our reading and writing time. We spend far more time writing and reading memos, blog posts, web content, press releases, speeches, and so on.

- We think we’re pretty good; everybody else sucks. On an effectiveness scale of 1 to 10 (with 10 being totally effective), professionals rate their own writing at 6.9. They rate writing prepared by others at 5.4.

- We agree on the Big 5. A majority of respondents thought the following issues contributed most to making written content “significantly less effective”: 1) too long; 2) poorly organized; 3) unclear; 4) too much jargon; 5) not precise enough.

- We waste a lot of time. For all respondents, 81% agreed or strongly agreed with the statement “Poorly written material wastes a lot of my time”. For managers, directors, and supervisors, the figure is 84%.

- We want feedback but have a hard time getting it. Only 49% of all respondents – and 41% of managers, directors, and supervisors – agree with the statement, “I get the feedback I need to make my writing better.”

- Professionals want to write better but are constrained by jargon, passive voice, corporate bullshit and “…the number of people who still think writing is more about making them sound important …” than communicating clearly.

Bernoff rolls all the numbers together and concludes that, “…America is spending 6 percent of total wages on time wasted attempting to get meaning out of poorly written material.” The total cost? About $400 billion.
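As a rough sanity check on how Bernoff’s two headline numbers relate, the sketch below assumes (my simplification; his own method may differ) that the $400 billion is simply 6 percent of total U.S. wages, and solves for the wage base that those two figures imply. Only the 6 percent and $400 billion come from the text; the variable names are illustrative.

```python
# Figures quoted from Bernoff's white paper.
WASTED_SHARE_OF_WAGES = 0.06   # "6 percent of total wages"
ESTIMATED_COST_USD = 400e9     # "About $400 billion"

# Assumption: cost = share of wages * total U.S. wages.
# Solving for total wages gives the wage base the two figures imply.
implied_total_wages = ESTIMATED_COST_USD / WASTED_SHARE_OF_WAGES

print(f"Implied wage base: ${implied_total_wages / 1e12:.2f} trillion")
```

In other words, the two figures are consistent with a national wage base of roughly $6.7 trillion.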

(You can find Bernoff’s white paper and infographics here. Brief summaries in the popular press also appear here and here).

Bernoff calculates the cost of wasted time. But what’s the direct cost? How much do we spend teaching our employees to write well? Bernoff doesn’t address this specifically, but I found a College Board survey from 2004 that digs into the question. The survey went to 120 major American companies affiliated with the Business Roundtable. The result? American companies (excluding government agencies and nonprofits) spend about $3.1 billion annually “remedying deficiencies in writing”.

The College Board study also cites an April 2003 white paper titled, “The Neglected ‘R’: The Need For a Writing Revolution.” The conclusion of that study was simple: “Writing today is not a frill for the few, but an essential skill for the many.”

In 2006, The Conference Board picked up a similar theme in a report that asked a simple question: “Are They Ready To Work?” The survey asked companies about the most important skills that newly minted graduates should have. It then asked respondents to grade the skills of newly hired employees. Graduates of two- and four-year college programs were rated “deficient” in three areas: 1) written communications; 2) writing in English; and 3) leadership.

Business leaders agree that writing is an important skill. We can cite studies going back more than a decade that suggest we’re doing a poor job teaching the skill. Bernoff’s study suggests we’re not doing any better today – in fact, we may be doing worse. What to do? We need to invest more time, energy, and effort teaching the “neglected R”. Or you could just hire me.