Prospero’s Precepts – Thinking About Thinking

Really?

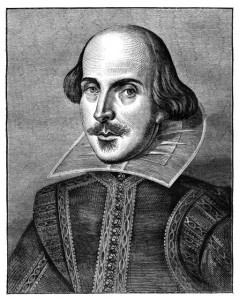

Did Shakespeare really write Shakespeare? It’s a question that’s been analyzed many times – mainly by historians and literary critics. But Peter Sturrock, a professor of physics at Stanford, recently took “A Scientific Approach to the Authorship Question.”

In his book, AKA Shakespeare, Sturrock uses probability, logic, Bayesian statistics, and good old-fashioned critical thinking to revisit the question. Sturrock argues that the real author of the Shakespearean plays could have been one of three different people. He uses a scientific, rationalist method and fashions a conversation between multiple observers, each with his own perspective.

For Shakespeare buffs, this is catnip. But even if you’re not caught up in the intrigues of the Elizabethan era, Sturrock provides a fascinating look at how to think about complex and fractured issues.

Sturrock also collects and organizes 11 key insights into critical thinking that he calls Prospero’s Precepts. These form the intellectual foundation for his inquiry into the authorship question. For me, the list itself is catnip, and worth the entire cost of the book. Here are the Precepts. I hope you enjoy them.

All beliefs in whatever realm are theories at some level. (Stephen Schneider)

Do not condemn the judgment of another because it differs from your own. You may both be wrong. (Dandemis)

Read not to contradict and confute; nor to believe and take for granted; nor to find talk and discourse; but to weigh and consider. (Francis Bacon)

Never fall in love with your hypothesis. (Peter Medawar)

It is a capital mistake to theorize before one has data. Insensibly one begins to twist facts to suit theories instead of theories to suit facts. (Arthur Conan Doyle)

A theory should not attempt to explain all the facts, because some of the facts are wrong. (Francis Crick)

The thing that doesn’t fit is the thing that is most interesting. (Richard Feynman)

To kill an error is as good a service as, and sometimes even better than, the establishing of a new truth or fact. (Charles Darwin)

It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so. (Mark Twain)

Ignorance is preferable to error; and he is less remote from the truth who believes nothing, than he who believes what is wrong. (Thomas Jefferson)

All truth passes through three stages. First, it is ridiculed, second, it is violently opposed, and third, it is accepted as self-evident. (Arthur Schopenhauer)

By the way, I first discovered Sturrock’s book and the Precepts on Maria Popova’s excellent website, Brain Pickings.

Inference, Frequentism, and Sex

Statistically significant.

I understand inferential statistics reasonably well, but I’m a rookie at Bayesian statistics. What’s the difference? Well, I’m glad you asked.

Inferential statistics allow us to infer some conclusion. Let’s say we want to study a hypothesis that Scientific American put forth last year: Men who do more housework have less sex. That’s an interesting thought and one that men – especially married men – might want to investigate.

Where do we start? First, we need to define the population. Let’s say we’re only interested in men in America. So, the hypothesis becomes:

Men in America who do more housework have less sex.

Now, all we have to do is interview all the men in America about the chores they do and how often they have sex. There are roughly 147 million men in America so this is going to take some time. Indeed, it will take so long that our attitudes toward sex and housework might change in the interim. The study would be useless.

We need a faster way. We might take a random sample of men and interview them. We gather the data and find that the hypothesis is true in the sample. The men we interviewed who did more chores also had less sex.

Now we have to ask ourselves, what’s the probability that the sample accurately represents the population? We found an inverse correlation (more housework, less sex) in the sample. Can we infer that this is also true in the population?

To do this, we need to play with probabilities. We randomly selected the men in the sample, which means the sample probably represents the population (but maybe not). Generally speaking, larger random samples are more likely to accurately represent the population.
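To see why larger random samples tend to be more representative, here is a rough Python sketch. The population is invented for illustration (weekly housework hours for 100,000 hypothetical men), and the function name `sample_mean_spread` is my own. It draws many random samples of a given size and measures how much the sample average bounces around from one sample to the next.

```python
import random
import statistics

def sample_mean_spread(population, sample_size, trials=500, seed=0):
    """Standard deviation of the sample mean across repeated random samples."""
    rng = random.Random(seed)
    means = [
        statistics.fmean(rng.sample(population, sample_size))
        for _ in range(trials)
    ]
    return statistics.stdev(means)

# Made-up population: weekly housework hours (assumption, not real data).
rng = random.Random(42)
population = [max(0.0, rng.gauss(8, 4)) for _ in range(100_000)]

small = sample_mean_spread(population, 25)
large = sample_mean_spread(population, 2500)

print(f"Spread of the average with 25 men:   {small:.3f} hours")
print(f"Spread of the average with 2500 men: {large:.3f} hours")
```

The bigger sample gives averages that cluster much more tightly around the population value, which is the sense in which it is more likely to represent the population.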

We can also calculate how likely it is that a correlation this strong would turn up in a sample purely by chance, if no such correlation existed in the population. By general agreement, if that probability is less than 5%, we declare that the finding is statistically significant. In other words, we believe that the finding is real and not an accident of which men happened to land in the sample. We infer that it exists in the population as well as the sample.
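One common way to put a number on this is a permutation test. The sketch below is my own illustration, not the method used in the study, and the data are simulated with a built-in negative relationship. It shuffles the sex-frequency answers so that any link to housework is broken, and counts how often the shuffled data show a correlation at least as strong as the one actually observed.

```python
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def permutation_p_value(xs, ys, n_permutations=2000, seed=0):
    """Share of shuffles whose |r| is at least as large as the observed |r|."""
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if abs(pearson_r(xs, shuffled)) >= observed:
            hits += 1
    # Add one to both counts so the estimate is never exactly zero.
    return (hits + 1) / (n_permutations + 1)

# Simulated sample of 200 men (assumption: invented numbers, not survey data).
rng = random.Random(1)
housework = [rng.uniform(0, 20) for _ in range(200)]
sex = [6 - 0.15 * hours + rng.gauss(0, 1.5) for hours in housework]

r = pearson_r(housework, sex)
p = permutation_p_value(housework, sex)
print(f"correlation = {r:.2f}, p-value = {p:.4f}")
print("statistically significant" if p < 0.05 else "not significant")
```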

Two things to note here:

- Statistical significance has to do with probability, not size. It’s not the same as saying, “Tom is significantly smarter than Joe”. A statistically significant difference may be quite small.

- If there is really no relationship in the population, a study like ours will still report a statistically significant finding five per cent of the time – one time out of twenty. Yikes! The five per cent threshold is a convention, and it is especially common in the social sciences. Researchers who need more certainty sometimes use a stricter threshold, such as one per cent or one-tenth of one per cent.
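A small simulation shows where the one-in-twenty figure comes from. In this sketch, which rests on my own simplifying assumptions (two equal-sized groups drawn from the same normal distribution with a known standard deviation of 1, compared with a z-test), there is no real difference at all. Even so, roughly five per cent of the simulated studies clear the five per cent threshold.

```python
import random
from statistics import NormalDist, fmean

def two_sided_p_value(group_a, group_b, sigma=1.0):
    """z-test for a difference in means.

    Assumes equal group sizes and a known common standard deviation.
    """
    n = len(group_a)
    standard_error = sigma * (2 / n) ** 0.5
    z = (fmean(group_a) - fmean(group_b)) / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))

def false_positive_rate(studies=4000, n=50, alpha=0.05, seed=0):
    """Fraction of no-effect studies that still look significant."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(studies):
        # Both groups come from the same distribution: no true effect.
        group_a = [rng.gauss(0, 1) for _ in range(n)]
        group_b = [rng.gauss(0, 1) for _ in range(n)]
        if two_sided_p_value(group_a, group_b) < alpha:
            hits += 1
    return hits / studies

rate = false_positive_rate()
print(f"Share of no-effect studies declared significant: {rate:.3f}")
```

Passing `alpha=0.01` to `false_positive_rate` shows how a stricter threshold cuts the false alarms.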

Note that our finding – which is called a frequentist probability – represents a point in time. It’s essentially a snapshot. We noted that, if our study takes too long, conditions might change and invalidate the study. Indeed, the Scientific American study cites data collected from 1992 to 1994. Perhaps conditions have changed since then. So how accurate is this?

That’s where Bayesian statistics come into play. They allow us to add in new information as it becomes available. Let’s say, for instance, that women’s attitudes toward men who do housework evolve over time. We could factor in the new information and recalculate the probabilities.
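As a toy illustration of folding in new information, here is a sketch of the simplest Bayesian update I know of, the Beta-Binomial model. Everything in it is hypothetical: the survey counts are invented, and the helper names `update_beta` and `beta_mean` are mine. The belief about a proportion (say, the share of women who view housework-doing men favorably) is stored as two counts, and each new survey simply adds to them.

```python
def update_beta(prior, favorable, unfavorable):
    """Combine a Beta(a, b) belief with new yes/no survey counts."""
    a, b = prior
    return (a + favorable, b + unfavorable)

def beta_mean(params):
    """Expected value of a Beta(a, b) distribution."""
    a, b = params
    return a / (a + b)

# Start with no opinion: Beta(1, 1) treats every proportion as equally likely.
belief = (1, 1)

# Hypothetical older survey: 30 favorable, 70 unfavorable.
belief = update_beta(belief, 30, 70)
print(f"After the first survey:  {beta_mean(belief):.3f}")

# Hypothetical newer survey, after attitudes shift: 60 favorable, 40 unfavorable.
belief = update_beta(belief, 60, 40)
print(f"After the second survey: {beta_mean(belief):.3f}")
```

The estimate moves toward the newer data without throwing away the older data. This case is easy only because the arithmetic reduces to addition; most real problems do not.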

Bayesian statistics are complicated and hard to compute. We’ve only been using them widely in the recent past as more powerful computers have become available. Still, they can help us work out complex problems that used to be way beyond our capabilities.

I’ll write more about Bayesian probabilities as I learn more. In the meantime, I’m going to sell the vacuum cleaner.