Brian Williams and Core Priorities

Values clarification.

In their book Decisive, Chip and Dan Heath write about the need to honor our core priorities when making decisions. They write that “An agonizing decision … is often a sign of a conflict among ‘core priorities’ … [T]hese are priorities that transcend the week or the quarter … [including] long-term goals and aspirations.”

To illustrate their point, the Heath brothers tell the story of Interplast*, the non-profit organization that recruits volunteer surgeons to repair cleft lips throughout the world. Interplast had some “thorny issues” that caused contentious arguments and internal turmoil.

One seemingly minor issue was whether surgeons could take their families with them when they traveled to remote locations. The argument in favor: the surgeons were volunteering their time and their vacations, so it seemed only fair to let them bring their families. The argument against: the families distracted the surgeons from their work and made it more difficult to train local doctors.

The argument was intense and divisive. Finally, one board member said to another, “You know, the difference between you and me is you believe the customer is the volunteer surgeon and I believe the customer is the patient.”

That simple statement led Interplast to re-examine and clarify its core priorities. Ultimately, Interplast’s executives resolved that the patient is indeed the center of their universe. Once that was clarified, the decision was no longer agonizing – surgeons should not take their families along.

I thought of Interplast as I read the coverage of Brian Williams’ situation at NBC. Like Interplast, NBC had to clarify its core priorities. The basic question: whom does NBC serve? Is it more loyal to Brian Williams or to its viewing audience?

In normal times, NBC doesn’t have to answer this question. It can support and promote its anchor while also serving its audience. In a crisis, however, NBC is forced to choose. It’s the moment of truth. Does the company support the man in whom it has invested so much? Or does it protect its credibility with the audience?

Ultimately, NBC sought to protect its credibility. I was struck by what Lester Holt said on his first evening on the air: “Now if I may on a personal note say it is an enormously difficult story to report. Brian is a member of our family, but so are you, our viewers and we will work every night to be worthy of your trust.”

Holt’s statement suggests to me that NBC’s core priority is credibility with the audience. I certainly respect that. It also struck me as being very similar to the question Interplast asked itself.

Clarifying your core priorities is never a simple task. Indeed, it may take a crisis to force the issue. But once you complete the task, everything else is simpler. As my father used to say: Decisions are easy when values are clear.

The New York Times published two articles reporting on how NBC executives reached their decisions regarding Brian Williams.

*Interplast has been renamed ReSurge International.

Good Decisions, Bad Outcomes

Bad Decision or Bad Outcome?

The New England Patriots won the Super Bowl because their opponents, the Seattle Seahawks, made a bad decision. That’s what millions of sports fans in the United States believe this week.

But was it a bad decision or merely a bad outcome? We often evaluate whether a decision was good or bad based on the result. But Ronald Howard, the father of decision analysis, says that you need to separate the two. You can only judge whether a decision was good or bad by studying how the decision was made.

Howard writes that the outcome has nothing to do with the quality of the decision process. A good decision can yield a good result or a bad result. Similarly, a bad decision can generate a good or a bad outcome. Don’t assume that the decision alone determines the result. It’s not so simple: something entirely random or unforeseen can turn a good decision into a bad result, or vice versa.

But, as my boss used to say, we only care about results. So why bother to study the decision process? We should study only what counts – the result of the process, not the process itself.

Well, … not so fast. Let’s say we make a decision based entirely on emotion and gut feel. Let’s also assume that things turn out just great. Did we make a good decision? Maybe. Or maybe we just got lucky.

During the penny stock market boom in Denver, I decided to invest $500 in a wildcat oil company whose stock was selling for ten cents a share. In two weeks, the stock more than tripled, to 31 cents a share. I had turned my $500 stake into more than $1,500. I was a genius! (There’s a touch of confirmation bias here.)

What’s wrong with this story? I assumed that I had made a good decision because I got a good outcome. I must be smart. But, really, I was just lucky. And you probably know the rest of the story. Assuming that I was a smart stock picker, I re-invested the $1,500 and – over the next six months – lost it all.

Today, when I evaluate stocks with the aim of buying something, I repeat a little mantra: “I am not a genius. I am not a genius.” It creates a much better decision process.

I was lucky and got a good outcome from a bad decision process. The Seattle Seahawks, on the other hand, got the opposite. From what I’ve read, they had a good process and made a good decision. They got a horrendous result. Even though their fans will vilify the Seahawks’ coaches, I wouldn’t change their decision process.

And that’s the point here. If you want good decisions, study the decision process and ignore the outcome. You’ll get better processes and better decisions. In the long run, that will tilt the odds in your favor. Chance favors the thoughtful decision maker.
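To make the luck-versus-process point concrete, here is a minimal Python sketch. It assumes, purely for illustration, that a "good" decision process leads to a good outcome 60% of the time and a "bad" one 40% of the time; those probabilities and the helper name `simulate` are hypothetical, not taken from Howard. Chance decides each individual outcome.

```python
import random


def simulate(win_prob: float, trials: int, seed: int) -> float:
    """Return the fraction of trials that end in a good outcome.

    win_prob is a hypothetical probability that a single decision made
    with this process turns out well; luck decides each trial.
    """
    rng = random.Random(seed)
    wins = sum(1 for _ in range(trials) if rng.random() < win_prob)
    return wins / trials


GOOD_PROCESS = 0.6  # assumed, for illustration only
BAD_PROCESS = 0.4   # assumed, for illustration only

# One decision each: either process can produce either outcome.
print("1 decision, good process:", simulate(GOOD_PROCESS, 1, seed=1))
print("1 decision, bad process: ", simulate(BAD_PROCESS, 1, seed=1))

# Many decisions: the better process reliably pulls ahead.
print("10,000 decisions, good process:", simulate(GOOD_PROCESS, 10_000, seed=2))
print("10,000 decisions, bad process: ", simulate(BAD_PROCESS, 10_000, seed=3))
```

A single trial tells you almost nothing about which process produced it; only the long run separates the two.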

What makes for a good decision process? I’ll write more about that in the coming weeks. In the meantime, you might like two video clips from Roch Parayre, a professor at the University of Pennsylvania, who explains why we should separate decision processes from decision results.

The Doctor Won’t See You Now

Shouldn’t you be at a meeting?

If you were to have a major heart problem (acute myocardial infarction, heart failure, or cardiac arrest), which of the following scenarios would you prefer?

Scenario A: the event occurs during the heavily attended annual meeting of the American Heart Association, when thousands of cardiologists are away from their offices; or

Scenario B: the event occurs at a time when there are no national cardiology meetings and fewer cardiologists are away from their offices.

If you’re like me, you’ll probably pick Scenario B. If I go into cardiac arrest, I’d like to know that the best cardiologists are available nearby. If they’re off gallivanting at some meeting, they’re useless to me.

But we might be wrong. According to a study published in JAMA Internal Medicine (December 22, 2014), some patients actually fare better under Scenario A.

The study, led by Anupam B. Jena, looked at some 208,000 heart incidents that required hospitalization from 2002 to 2011. Of these, slightly more than 29,000 patients were hospitalized during national meetings. Almost 179,000 patients were hospitalized during times when no national meetings were in session.

And how did they fare? The study asked two key questions: 1) how many of these patients died within 30 days of the incident, and 2) were there differences between the two groups? Here are the results:

- Heart failure – statistically significant difference – 17.5% of high-risk heart failure patients in teaching hospitals died within 30 days in Scenario A versus 24.8% in Scenario B. If there were no real difference between the groups, a gap this large would turn up by chance less than 0.1% of the time.

- Cardiac arrest – statistically significant difference – 59.1% of high-risk cardiac arrest patients in teaching hospitals died within 30 days in Scenario A versus 69.4% in Scenario B. If there were no real difference, a gap this large would turn up by chance less than 1.0% of the time.

- Acute myocardial infarction – no statistically significant difference between the two groups. (There were differences, but they could plausibly have arisen by chance.)

The general conclusion: “High-risk patients with heart failure and cardiac arrest hospitalized in teaching hospitals had lower 30-day mortality when admitted during dates of national cardiology meetings.”

It’s an interesting study but how do we interpret it? Here are a few observations:

- It’s not an experiment – we can only demonstrate cause-and-effect using an experimental method with random assignment. But that’s impossible in this case. The study certainly demonstrates a correlation but doesn’t tell us what caused what. We can make educated guesses, of course, but we have to remember that we’re guessing.

- The differences are fairly small – we often misinterpret the meaning of “statistically significant”. It sounds as if we found big differences between A and B; the differences, after all, are “significant”. But the term refers to probability, not to the size of the difference. In this case, a gap as large as the one in the heart failure groups would arise by chance less than 0.1% of the time if there were no real difference; for the cardiac arrest groups, the figure is less than 1%. So the differences are probably not flukes. But the differences themselves were fairly small: about 7 percentage points for heart failure and about 10 for cardiac arrest.

- The best guess is overtreatment – what causes these differences? The best guess seems to be that cardiologists – when they’re not off at some meeting – are “overly aggressive” in their treatments. The New York Times quotes Anupam Jena: “…we should not assume … that more is better. That may not be the case.” Remember, however, that this is just a guess. We haven’t proven that overtreatment is the culprit.
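Why doesn’t “significant” mean “big”? Because a p-value depends on the sample size as well as on the size of the difference. The Python sketch below applies a standard pooled two-proportion z-test to hypothetical numbers, not the study’s data: the same 2-percentage-point gap in mortality (20% versus 22%) is checked with 100 patients per group and with 10,000. The function name is illustrative, and the normal approximation is an assumption that holds reasonably well at these sizes.

```python
import math


def two_proportion_p_value(deaths_a: int, n_a: int, deaths_b: int, n_b: int) -> float:
    """Two-sided p-value for a difference between two proportions
    (pooled two-proportion z-test, normal approximation)."""
    p_a = deaths_a / n_a
    p_b = deaths_b / n_b
    pooled = (deaths_a + deaths_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of a standard normal: erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical data: the SAME 20% vs 22% mortality gap at two sample sizes.
small = two_proportion_p_value(20, 100, 22, 100)
large = two_proportion_p_value(2_000, 10_000, 2_200, 10_000)
print(f"100 patients per group:    p = {small:.3f}")
print(f"10,000 patients per group: p = {large:.5f}")
```

The gap is identical in both cases; only the number of patients changes. With 100 per group the difference is nowhere near significant, while with 10,000 per group it easily clears the 0.1% threshold.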

It’s a good study with interesting findings. But what should we do about them? Should cardiologists change their behavior based on this study? Translating a study’s findings into policies and protocols is a big jump. We’re moving from the scientific to the political. We need a heavy dose of critical thinking. What would you do?

Now You See It, But You Don’t

What don’t you see?

The problem with seeing is that you only see what you see. We may see something and try to make reasonable deductions from it. We assume that what we see is all there is. All too often, the assumption is completely erroneous. We wind up making decisions based on partial evidence. Our conclusions are wrong and, very often, consistently biased. We make the same mistake in the same way, over and over.

As Daniel Kahneman has taught us, what you see isn’t all there is. We’ve already seen one of his examples in the story of Steve. Kahneman presents this description:

Steve is very shy and withdrawn, invariably helpful but with little interest in people or in the world of reality. A meek and tidy soul, he has a need for order and structure, and a passion for detail.

Kahneman then asks: Is Steve more likely to be a farmer or a librarian?

If you read only what’s presented to you, you’ll most likely guess wrong. Kahneman wrote the description to fit our stereotype of a male librarian. But male farmers outnumber male librarians by a ratio of about 20:1. Statistically, it’s much more likely that Steve is a farmer. If you knew the base rate, you would guess Steve is a farmer.
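Bayes’ rule shows how strongly the base rate pushes back against the stereotype. In this Python sketch the 20:1 farmer-to-librarian ratio comes from the text; the likelihoods are hypothetical assumptions, chosen so that the description fits librarians ten times better than farmers.

```python
from fractions import Fraction

# Prior odds from the base rate: about 20 male farmers for every
# male librarian, so librarian:farmer odds are 1:20.
prior_odds_librarian = Fraction(1, 20)

# HYPOTHETICAL likelihoods: assume the description fits 50% of
# librarians but only 5% of farmers (a strong, 10x stereotype).
p_desc_given_librarian = Fraction(1, 2)
p_desc_given_farmer = Fraction(1, 20)

# Bayes' rule in odds form: posterior odds = prior odds x likelihood ratio.
likelihood_ratio = p_desc_given_librarian / p_desc_given_farmer
posterior_odds_librarian = prior_odds_librarian * likelihood_ratio

# Convert the odds back into a probability.
p_librarian = posterior_odds_librarian / (1 + posterior_odds_librarian)

print("likelihood ratio:", likelihood_ratio)
print("posterior odds (librarian:farmer):", posterior_odds_librarian)
print("P(librarian | description):", float(p_librarian))
```

Even with a stereotype this strong, the posterior odds come out 1:2, so under these assumed numbers Steve is still twice as likely to be a farmer.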

We saw a similar example with World War II bombers. Allied bombers returned to base bearing any number of bullet holes. To determine where to place protective armor, analysts mapped out the bullet holes. The key question: which sections of the bomber were most likely to be struck? Those are probably good places to put the armor.

But the analysts only saw planes that survived. They didn’t see the planes that didn’t make it home. If they made their decision based only on the planes they saw, they would place the armor in spots where non-lethal hits occurred. Fortunately, they realized that certain spots were under-represented in their bullet hole inventory – spots around the engines. Bombers that were hit in the engines often didn’t make it home and, thus, weren’t available to see. By understanding what they didn’t see, analysts made the right choices.
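The bomber story is a case of survivorship bias, and a small simulation shows how it distorts the data. This Python sketch is not the analysts’ actual method; it assumes, hypothetically, that hits land evenly across four sections and that an engine hit is far more often fatal than a hit elsewhere. The section names, lethality values, and the function `observed_hits` are all illustrative.

```python
import random
from collections import Counter

SECTIONS = ["engines", "fuselage", "wings", "tail"]

# HYPOTHETICAL chance that a single hit to each section downs the plane.
LETHALITY = {"engines": 0.6, "fuselage": 0.05, "wings": 0.05, "tail": 0.1}


def observed_hits(planes: int, hits_per_plane: int, seed: int) -> tuple[Counter, Counter]:
    """Return (hits on all planes, hits visible on planes that came home)."""
    rng = random.Random(seed)
    all_hits, survivor_hits = Counter(), Counter()
    for _ in range(planes):
        # Assume hits land uniformly across the sections.
        hits = [rng.choice(SECTIONS) for _ in range(hits_per_plane)]
        all_hits.update(hits)
        # The plane makes it home only if none of its hits is lethal.
        survived = all(rng.random() >= LETHALITY[s] for s in hits)
        if survived:
            survivor_hits.update(hits)
    return all_hits, survivor_hits


all_hits, seen = observed_hits(planes=10_000, hits_per_plane=3, seed=42)
for s in SECTIONS:
    share_all = all_hits[s] / sum(all_hits.values())
    share_seen = seen[s] / sum(seen.values())
    print(f"{s:9} all planes {share_all:.0%}   returning planes {share_seen:.0%}")
```

Across all planes each section takes roughly a quarter of the hits, but on the planes that return, engine hits are scarce. An analyst looking only at the returning planes would conclude that engines rarely get hit.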

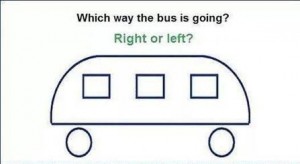

I like both of these examples but they’re somewhat abstract and removed from our day-to-day experience. So, how about a quick test of our abilities? In the illustration above, which way is the bus going?

Study the image for a while. I’ll post the answer soon.

Immoral Afternoons

Good advice.

I used to work for a CEO who had one hard and fast rule when it came to making decisions: he wouldn’t make any important decisions after 2:00 in the afternoon. I used to think it was an odd and unnecessary rule … but then I started counting calories.

My little food diary app keeps track of what I eat and lets me know if I’m getting too much of this or not enough of that. It’s interesting to keep track of what I consume. But what really surprised me was not what I eat so much as when I eat. I eat a well-balanced, healthy diet throughout the day. Then, around 7:00 at night, all hell breaks loose. On a typical day, I eat roughly 50% of my calories between 7:00 and 10:00 at night. If I went to bed at 7:00 pm, I’d be much healthier.

My experience reminded me of Daniel Kahneman’s story about the Israeli parole board. The default decision in any such hearing is to deny parole. To permit parole, the board has to find information that would point toward a positive outcome. In other words, it’s easy to deny parole. It takes more work – sometimes quite a bit more work – to grant parole.

Kahneman reports on a study that tracked parole decisions by time of day. Prisoners whose cases were considered just after lunch were more likely to win parole than those whose cases were considered before lunch. It all has to do with energy. Our brains consume huge amounts of energy. Making difficult decisions requires even more energy. Just before lunch, members of the parole board don’t have much energy. Just after lunch they do. So before lunch, they’re more likely to make the default decision. After lunch, they’re more capable of making the more laborious decision.

Energy doesn’t just affect decision making. It also affects willpower (which, of course, is a form of decision making). When our bodies have sufficient stores of energy, we also have more willpower. Why do I eat so many calories after 7:00 pm? Perhaps because my energy stores are at a low and, therefore, my willpower is also at a low. It might be smarter to spread my calories more evenly throughout the day so my energy – and willpower – don’t ebb.

Energy also affects ethics. A research study published in last month’s edition of Psychological Science is titled “The Morning Morality Effect.” The researchers, Maryam Kouchaki and Isaac Smith, ran four experiments and found that people “… engaged in less unethical behavior … on tasks performed in the morning than in the same tasks performed in the afternoon.” In fact, “people were 20% to 50% more likely to lie, cheat, or otherwise be dishonest in the afternoon than in the morning.”

What’s the common denominator here? Energy depletion. As the day wears on and our energy levels drop, we’re less able to make complicated decisions or resist temptation. The parole board example suggests that we can combat this to some degree simply by eating. I wonder if taking a nap might also help – though I haven’t seen any research evidence of this.

Bottom line: my old boss was right. Make the tough decisions in the morning. Take it easy in the afternoon.